As artificial intelligence (AI) continues to revolutionize software development, AI-powered code generators are becoming increasingly sophisticated. These tools can speed up the coding process by generating functional code snippets, or even entire applications, from minimal human input. However, this rise in automation brings the challenge of ensuring the reliability, transparency, and accuracy of the generated code. This is where test observability plays a crucial role.

Test observability refers to the ability to understand, monitor, and analyze the behavior of tests in a system. For AI code generators, test observability is essential to ensuring that the generated code meets quality standards and functions as expected. In this post, we'll discuss best practices for ensuring robust test observability in AI code generators.

1. Establish Clear Testing Goals and Metrics

Before delving into the technical aspects of test observability, it is important to define what "success" looks like for tests in AI code generators. Setting clear testing goals allows you to identify the right metrics to observe, monitor, and report on during the testing process.

Key Metrics for AI Code Generators:

Code Accuracy: Measure the degree to which the AI-generated code matches the expected functionality.

Test Coverage: Ensure that all aspects of the generated code are tested, including edge cases and non-functional requirements.

Error Detection: Track the system's ability to detect and handle bugs, vulnerabilities, or performance bottlenecks.

Execution Performance: Monitor the efficiency and speed of the generated code under different conditions.

By establishing these metrics, teams can create test cases that target specific aspects of code performance and functionality, improving observability and the overall reliability of the output.
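As a minimal sketch, the four metrics above could be computed from a batch of test results as follows. The `CaseResult` schema, its field names, and the choice to measure coverage as the share of requirements exercised by at least one test are illustrative assumptions, not a standard format.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class CaseResult:
    """Outcome of one test case against AI-generated code (hypothetical schema)."""
    test_id: str
    requirement: str                      # requirement the test exercises
    passed: bool                          # observed behavior matched expectations
    seeded_defect: bool = False           # test injects a known bug
    defect_caught: bool = False           # pipeline flagged the injected bug
    exec_seconds: Optional[float] = None  # None if the code never ran


def summarize(results: List[CaseResult], total_requirements: int) -> Dict[str, Optional[float]]:
    """Compute accuracy, coverage, error detection, and execution performance."""
    if not results or total_requirements <= 0:
        raise ValueError("need at least one result and one requirement")
    seeded = [r for r in results if r.seeded_defect]
    timings = [r.exec_seconds for r in results if r.exec_seconds is not None]
    return {
        # Code accuracy: fraction of tests whose outcome matched the expected behavior.
        "accuracy": sum(r.passed for r in results) / len(results),
        # Test coverage: fraction of requirements exercised by at least one test.
        "coverage": len({r.requirement for r in results}) / total_requirements,
        # Error detection: fraction of injected defects the pipeline caught.
        "detection_rate": (sum(r.defect_caught for r in seeded) / len(seeded)) if seeded else None,
        # Execution performance: mean runtime of code that actually executed.
        "mean_exec_seconds": mean(timings) if timings else None,
    }
```

Recording these numbers per model version makes it straightforward to compare one generator release against the next.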

2. Implement Comprehensive Logging Mechanisms

Observability depends heavily on capturing detailed logs of system behavior during both the code generation and testing phases. Comprehensive logging mechanisms allow developers to trace errors, unexpected behaviors, and bottlenecks, providing a way to dig into the "why" behind a test's success or failure.

Guidelines for Logging:

Granular Logs: Implement logging at various levels of the AI pipeline. This includes logging data inputs, outputs, intermediate decision-making steps (such as code suggestions), and post-generation feedback.

Tagging Logs: Add context to logs, such as which specific algorithm or model version produced the code. This ensures you can trace issues back to their origin.

Error and Performance Logs: Ensure logs capture both error messages and performance metrics, such as the time taken to generate and execute code.

By collecting comprehensive logs, you create a rich source of data that can be used to analyze the entire lifecycle of code generation and testing, improving both visibility and troubleshooting.
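The tagging and performance-logging practices above might look like the following sketch, built on Python's standard `logging` and `json` modules. It emits one JSON object per event; the field names (`run_id`, `model_version`, `stage`, `duration_ms`) and the `log_stage` helper are hypothetical, chosen only for illustration.

```python
import json
import logging
import time
from typing import Any, Callable


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line so logs can be filtered and joined later."""

    CONTEXT_FIELDS = ("run_id", "model_version", "stage", "duration_ms")

    def format(self, record: logging.LogRecord) -> str:
        payload = {"level": record.levelname, "message": record.getMessage()}
        # Copy tagging fields supplied through `extra=`; missing ones are skipped.
        for field in self.CONTEXT_FIELDS:
            if hasattr(record, field):
                payload[field] = getattr(record, field)
        if record.exc_info:
            payload["error"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def make_logger(stream) -> logging.Logger:
    """Create a logger named 'codegen' that writes JSON lines to `stream`."""
    logger = logging.getLogger("codegen")
    logger.handlers.clear()          # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False         # keep records out of the root logger
    return logger


def log_stage(logger: logging.Logger, run_id: str, model_version: str,
              stage: str, func: Callable[..., Any], *args: Any) -> Any:
    """Run one pipeline stage and log its duration, tagged with run and model version."""
    context = {"run_id": run_id, "model_version": model_version, "stage": stage}
    start = time.perf_counter()
    try:
        result = func(*args)
    except Exception:
        context["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
        logger.exception("stage failed", extra=context)  # includes the traceback
        raise
    context["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
    logger.info("stage completed", extra=context)
    return result
```

Because every line carries the model version and stage, a failing test can be traced back to the exact generator release and pipeline step that produced it.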

3. Automate Tests with CI/CD Pipelines

Automated testing plays a crucial role in AI code generation systems, allowing for the continuous evaluation of code quality at every stage of development. CI/CD (Continuous Integration and Continuous Delivery) pipelines make it possible to automatically trigger test cases on new AI-generated code, reducing the manual effort needed to ensure code quality.

How CI/CD Enhances Observability:

Real-Time Feedback: Automated tests immediately identify issues with generated code, improving detection and response times.

Consistent Test Execution: By automating tests, you ensure that tests are run in a consistent environment with the same test data, reducing variance and improving observability.

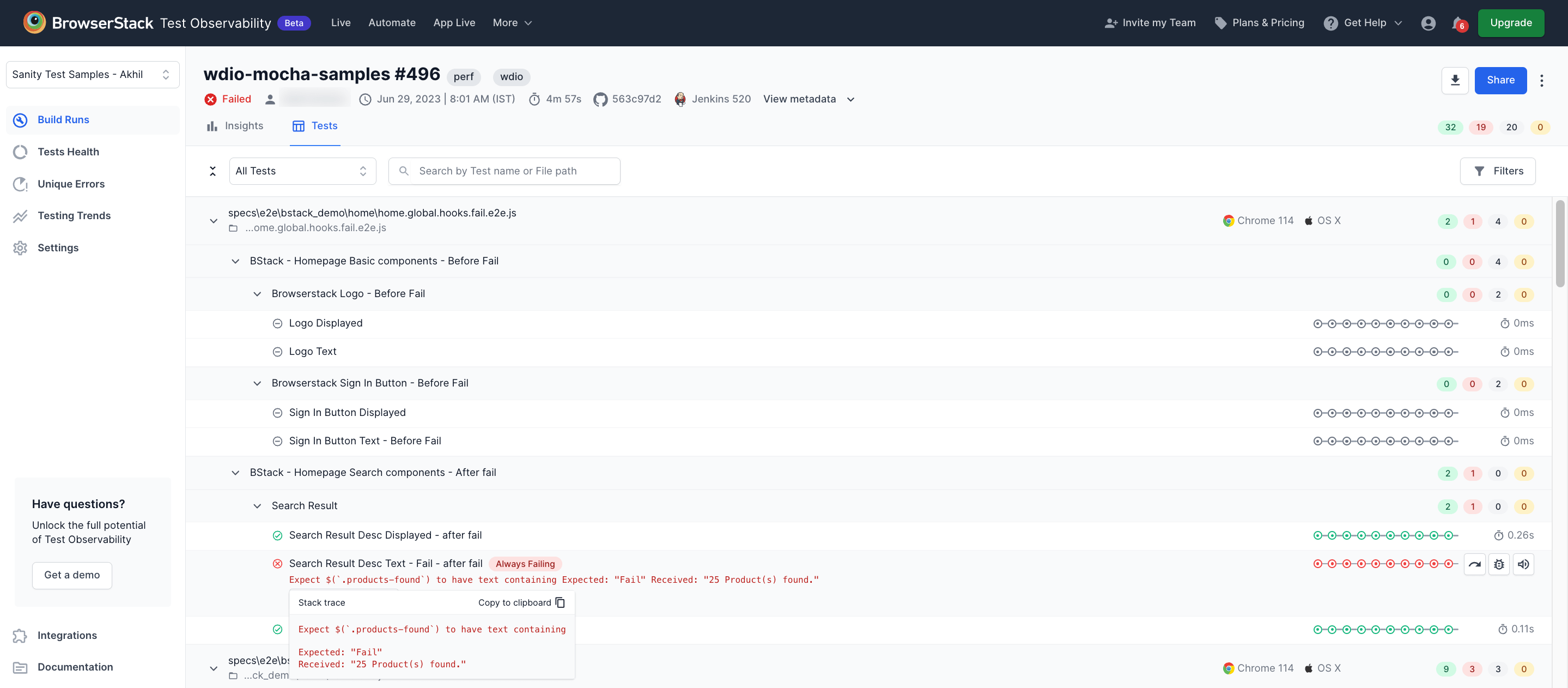

Test Result Dashboards: CI/CD pipelines can include dashboards that aggregate test results in real time, providing clear insights into the overall health and performance of the AI code generator.

Automating tests also ensures that even the smallest changes (such as a model update or algorithm tweak) are rigorously tested, improving the system's ability to observe and respond to potential issues.
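One way to sketch the automated check a CI/CD pipeline step might run: execute a generated Python program in a fresh interpreter with fixed inputs and a timeout, then turn the pass rate into an exit code that fails the build. The `(stdin, expected_stdout)` case format, the `ci_gate` name, and the pass-rate threshold are assumptions. Note that `subprocess` only isolates the process; it is not a security sandbox, so untrusted generated code should run inside a container or similar environment.

```python
import subprocess
import sys
from typing import List, Tuple

# Hypothetical fixture format: (stdin fed to the generated program, expected stdout).
Case = Tuple[str, str]


def run_case(source: str, case: Case, timeout: float = 5.0) -> bool:
    """Run generated Python source in a fresh interpreter with a fixed input."""
    stdin, expected = case
    try:
        proc = subprocess.run(
            [sys.executable, "-c", source],
            input=stdin,
            capture_output=True,
            text=True,
            timeout=timeout,  # a hung program counts as a failure
        )
    except subprocess.TimeoutExpired:
        return False
    return proc.returncode == 0 and proc.stdout.strip() == expected.strip()


def ci_gate(source: str, cases: List[Case], min_pass_rate: float = 1.0) -> int:
    """Return a process exit code: 0 if enough cases pass, 1 otherwise."""
    passed = sum(run_case(source, c) for c in cases)
    rate = passed / len(cases) if cases else 0.0
    print(f"{passed}/{len(cases)} cases passed ({rate:.0%})")
    return 0 if rate >= min_pass_rate else 1
```

A pipeline step could then call `sys.exit(ci_gate(source, cases))`, so any regression after a model update fails the job and shows up on the results dashboard.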

4. Leverage Synthetic Test Data

In traditional software testing, real-world data is often used to ensure that code behaves as expected under normal conditions. However, AI code generators can benefit from synthetic data that tests edge cases and unusual conditions that may not commonly appear in production environments.

Benefits of Synthetic Data for Observability:

Diverse Test Scenarios: Synthetic data lets you craft specific scenarios designed to test various aspects of the AI-generated code, such as its ability to handle edge cases, scalability issues, or security vulnerabilities.

Controlled Testing Environments: Since synthetic data is artificially created, it offers complete control over input variables, making it easier to identify how specific inputs affect the generated code's behavior.

Predictable Outcomes: Because the expected results of synthetic test cases are known in advance, you can quickly observe and evaluate whether the generated code behaves correctly in different contexts.

Using synthetic data not only improves test coverage but also improves observability into how well the AI code generator handles non-standard or unexpected inputs.
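A small sketch of seeded synthetic data: hand-picked edge cases plus reproducible random inputs, checked against a trusted reference (an "oracle") so the expected outcome of every case is known in advance. Here the task is sorting a list of integers, with Python's built-in `sorted` as the oracle; both are assumptions for illustration, as are the helper names.

```python
import random
from typing import Callable, List

IntList = List[int]


def synthetic_int_lists(seed: int, n_random: int = 50) -> List[IntList]:
    """Hand-picked edge cases plus reproducible random lists for a sorting task."""
    edge_cases: List[IntList] = [
        [],                          # empty input
        [7],                         # single element
        [3, 3, 3],                   # only duplicates
        [-5, 0, 5],                  # negatives and zero
        [2**63 - 1, -(2**63)],       # signed 64-bit extremes
        list(range(1000, 0, -1)),    # large and reverse-sorted
    ]
    rng = random.Random(seed)  # private generator: same seed, same data
    randoms = [
        [rng.randint(-100, 100) for _ in range(rng.randint(0, 20))]
        for _ in range(n_random)
    ]
    return edge_cases + randoms


def find_failures(candidate: Callable[[IntList], IntList],
                  oracle: Callable[[IntList], IntList],
                  inputs: List[IntList]) -> List[IntList]:
    """Return every input on which the candidate disagrees with the oracle or raises."""
    failures = []
    for data in inputs:
        try:
            # Pass copies so an in-place mutation by one function can't affect the other.
            ok = candidate(list(data)) == oracle(list(data))
        except Exception:
            ok = False
        if not ok:
            failures.append(data)
    return failures
```

For example, a hypothetical generated function `lambda xs: sorted(set(xs))` silently drops duplicates; `find_failures` reports `[3, 3, 3]` among its failing inputs, and fixing the seed keeps that report identical across runs.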

5. Instrument Code for Observability from the Ground Up

For meaningful observability, it is important to instrument both the AI code generation system and the generated code itself with monitoring hooks, trace points, and alerts. This ensures that tests can directly track how different components of the system behave during code generation and execution.

Key Instrumentation Practices:

Monitoring Hooks in Code Generators: Add hooks throughout the AI model's logic and decision-making process. These hooks capture vital data about the generator's intermediate states, helping you observe why the system produced certain code.

Telemetry in Generated Code: Ensure the generated code includes observability features, such as telemetry points, that track how the code interacts with different system resources (e.g., memory, CPU, I/O).

Automated Alerts: Set up automated alerting mechanisms for abnormal test behaviors, such as test failures, performance degradation, or security breaches.

By instrumenting both the code generator and the generated code, you increase visibility into the AI system's operations and can more quickly trace unexpected outcomes to their root causes.
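The telemetry and alerting practices above could be sketched as a decorator that wraps generated functions and records the duration, peak traced memory, and outcome of each call. The in-memory `TELEMETRY` and `ALERTS` lists are placeholders for a real metrics backend and alerting channel, and `tracemalloc` only tracks memory allocated through Python, not total process memory or CPU time.

```python
import functools
import time
import tracemalloc
from typing import Any, Callable, Dict, List, Optional

TELEMETRY: List[Dict[str, Any]] = []   # placeholder for a metrics backend
ALERTS: List[str] = []                 # placeholder for an alerting channel


def instrumented(name: str, max_seconds: Optional[float] = None):
    """Decorator recording duration, peak traced memory, and outcome of each call."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # Only the outermost instrumented call owns tracemalloc, so nested
            # instrumented calls don't stop tracing early.
            owns_tracing = not tracemalloc.is_tracing()
            if owns_tracing:
                tracemalloc.start()
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                elapsed = time.perf_counter() - start
                peak = None
                if owns_tracing:
                    _, peak = tracemalloc.get_traced_memory()
                    tracemalloc.stop()
                TELEMETRY.append({"name": name, "status": status,
                                  "seconds": elapsed, "peak_bytes": peak})
                # Raise an alert on failures or on calls slower than the budget.
                if status == "error":
                    ALERTS.append(f"{name}: call raised an exception")
                elif max_seconds is not None and elapsed > max_seconds:
                    ALERTS.append(f"{name}: took {elapsed:.3f}s (limit {max_seconds}s)")
        return wrapper
    return decorator
```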

6. Build Feedback Loops through Test Observability

Test observability should not be a one-way street. Instead, it is most effective when paired with feedback loops that allow the AI code generator to learn and improve based on observed test results.

Feedback Loop Implementation:

Post-Generation Analysis: After tests are executed, analyze the logs and metrics to spot recurring issues or trends. Use this data to update or fine-tune the AI models and improve future code generation accuracy.

Test Case Generation: Based on observed issues, create new targeted test cases that probe areas where the AI code generator may be underperforming.

Continuous Model Improvement: Use the insights gained from test observability to refine the training data or algorithms driving the AI system, ultimately improving the quality of the code it generates over time.

This iterative approach continuously improves the AI code generator, making it more robust, efficient, and reliable.
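A minimal sketch of the post-generation analysis step: group failures extracted from test logs by task category and error type, keep the ones that recur, and turn each into a request for new targeted test cases. The `Failure` schema and the recurrence threshold are hypothetical, and feeding the results back into model fine-tuning is outside the scope of this sketch.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Failure:
    """One failed test, as extracted from logs (hypothetical schema)."""
    category: str      # e.g. the task family whose prompt produced the code
    error_type: str    # e.g. the exception class or failed assertion


def recurring_issues(failures: List[Failure],
                     min_count: int = 2) -> List[Tuple[Tuple[str, str], int]]:
    """Group failures by (category, error_type); keep groups seen at least min_count times."""
    counts = Counter((f.category, f.error_type) for f in failures)
    # most_common() orders by frequency, so the worst issues come first.
    return [(key, n) for key, n in counts.most_common() if n >= min_count]


def new_test_targets(failures: List[Failure], min_count: int = 2) -> List[str]:
    """Turn each recurring issue into a request for more targeted test cases."""
    return [
        f"add tests for {category!r} inputs that trigger {error_type}"
        for (category, error_type), _ in recurring_issues(failures, min_count)
    ]
```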

7. Integrate Visualizations for Better Understanding

Finally, test observability becomes significantly more useful when paired with meaningful visualizations. Dashboards, graphs, and heat maps give developers and testers intuitive ways to track system performance, identify anomalies, and monitor test coverage.

Visualization Tools for Observability:

Test Coverage Heat Maps: Visualize which areas of the generated code are most frequently or rarely tested, helping you identify gaps in testing.

Error Trend Graphs: Chart the frequency and type of errors over time, making it easy to observe improvement or regression in code quality.

Performance Metrics Dashboards: Use real-time dashboards to track key performance metrics (e.g., execution time, resource utilization) and monitor how changes to the AI code generator affect these metrics.

Visual representations of test observability data can quickly draw attention to critical areas, accelerating troubleshooting and helping ensure that tests are as comprehensive as possible.
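The data behind an error trend graph can be prepared with a few lines of standard-library Python. This sketch buckets `(day, error_type)` events per day and renders a plain-text bar chart; in practice a plotting library or dashboard tool would draw the chart, and the event format here is an assumption.

```python
from collections import Counter, defaultdict
from datetime import date
from typing import Dict, Iterable, List, Tuple


def error_trend(errors: Iterable[Tuple[date, str]]) -> Dict[date, Counter]:
    """Bucket (day, error_type) events into per-day counters, ordered by day."""
    buckets: Dict[date, Counter] = defaultdict(Counter)
    for day, error_type in errors:
        buckets[day][error_type] += 1
    return dict(sorted(buckets.items()))


def render_text_chart(trend: Dict[date, Counter], width: int = 40) -> List[str]:
    """Render total errors per day as horizontal bars scaled to `width` characters."""
    totals = {day: sum(c.values()) for day, c in trend.items()}
    peak = max(totals.values(), default=0)
    lines = []
    for day, total in totals.items():
        bar = "#" * (round(total / peak * width) if peak else 0)
        # Show the most frequent error type that day next to the bar.
        top = trend[day].most_common(1)[0][0] if trend[day] else "-"
        lines.append(f"{day.isoformat()} {bar:<{width}} {total:>4} (top: {top})")
    return lines
```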

Conclusion

Ensuring test observability in AI code generators is a multifaceted process that requires setting clear goals, implementing robust logging, automating tests, using synthetic data, and building feedback loops. By following these best practices, developers can significantly improve their ability to monitor, understand, and improve the performance of AI-generated code.

As AI code generators become more prevalent in software development workflows, ensuring test observability will be key to maintaining high quality standards and preventing unexpected failures or vulnerabilities in the generated code. By investing in these practices, organizations can fully unlock the potential of AI-powered development tools.